We are making a robot play chess against a human, and thus we need to see the moves the human makes.

The most intuitive is to take an image before the human move

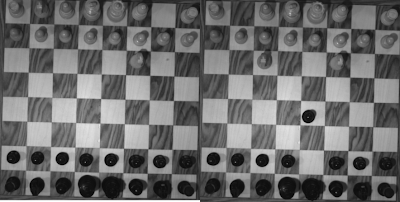

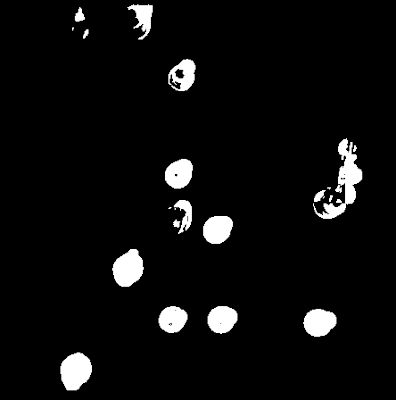

The two columns shows a black pieces move, the left column shows a black pawn move from a white square to another white square, the right column shows a black pawn move from a black to a black square.

First we have before the move

After the move

After the move An the difference.

An the difference.

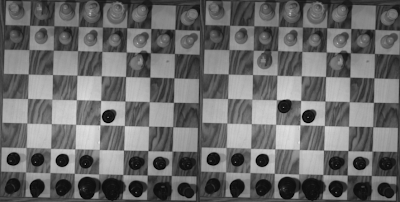

The above little test shows that it should be easy to detect piece movement (It works equally well with white pieces).

Obviously things look different when there are changes in illumination, we are designing this system to be used for exhibitions, therefore we assumes that there is going to be some changes in the global illumination, although we will set up light and provide a somewhat stable illumination basis. The following two images where taken within a few minutes.

and the difference:

Two thing are worth noting here, first off all, there is visible changes in the light at locations where there are no changes in respect to piece content. Furthermore, the white knight moved from [G1], is rather difficult to see.

Two thing are worth noting here, first off all, there is visible changes in the light at locations where there are no changes in respect to piece content. Furthermore, the white knight moved from [G1], is rather difficult to see.Thresholding the image yields:

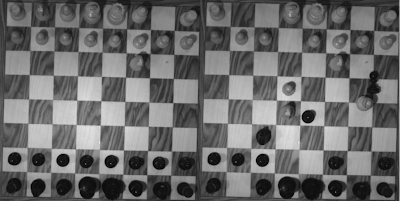

This test until now points toward a possibility of only using changes in intensity between frames for detection of where pieces move from and to

This test until now points toward a possibility of only using changes in intensity between frames for detection of where pieces move from and toI really find it difficult to believe that this simple approach is stable when global illumination changes more than what I have sample images for right now. To compensate for the illumination problems, I hope that the difference off edge images of before and after are more robust for global light illumination, lets see how it looks.

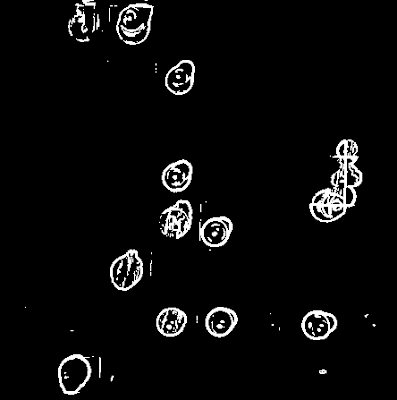

first the difference of the two black pawns for comparison:

Is is easy to see that once again detecting what pieces have moved should be doable.

Is is easy to see that once again detecting what pieces have moved should be doable.Now on the image with the illumination problems, I have thresholded the images right away,

and it seems like it should be possible to find the pieces. Note that there are pixels which are noise, they can be removed in some different ways, by smoothing the image multiple times before edge detection, some of the pixel noise can be removed, as the following image shows:

and it seems like it should be possible to find the pieces. Note that there are pixels which are noise, they can be removed in some different ways, by smoothing the image multiple times before edge detection, some of the pixel noise can be removed, as the following image shows: Actually, From the test I have made right now, it seems like the Intensity image can be used for reasonable performance, the problem I currently can see is that in the thresholded image, finding an circle at the knight location seems non trivial and finding the circles are rather important since this is probably what we are going to use a input for the robot.

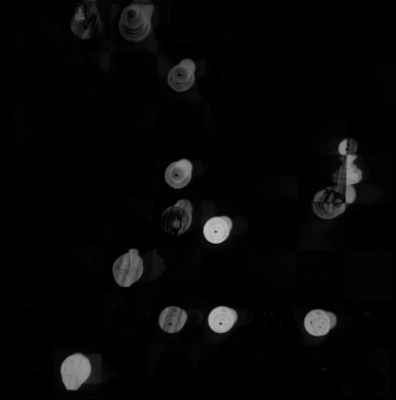

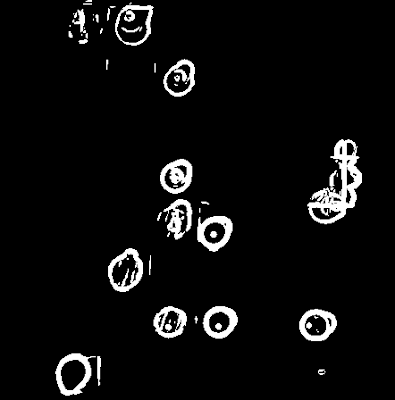

Actually, From the test I have made right now, it seems like the Intensity image can be used for reasonable performance, the problem I currently can see is that in the thresholded image, finding an circle at the knight location seems non trivial and finding the circles are rather important since this is probably what we are going to use a input for the robot.A tuned circle Hough transform reveals the following circles in the problematic images using intensity and a static threshold of 0.1 (intensity in the range [0:1]), the read areas are where the pieces where before, and the yellow are where they are after. The Hough transform has been tuned to not making any false negatives.

From the above image, we can see that the size of some of the circles doesn't fit with the size of the pieces. I expect that further processing can find the correct size, and also negate false positives

Using the thresholded difference of edges as input for the hough transform yield:

Once again, the parameters off the hough transform was tuned to make no false negatives, but to capture all positives, far more false postives are produces compared to using the intensity difference image. The sensitivity off the hough transform was actually slightly decreased, but the image was smoothed several times for achieving the desired result.

Conclusion: I would say that both off the discussed methods looks promising for identifying areas off interest for more complex processing.

The following problems need to be solved next:

- Investigate how this works when illumination changes

- Kill false positives

- Identify the square that the change are in so the chess engine can be informed about the move

First we detect the area of interest by threshing the difference of edges

Then we dilating with a 3x3 square kernel 9 times and find connected component in the dilated image, components with smaller width or height than an pawn radius are discarded. We are only interested in pieces which are moved between squares, evaluating

This leaves us only with the squares pieces have moved from and to which are handled by logic.